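I used to sell computers.

Not as a properly registered business, though.

I collected, one by one, the computers people in my neighborhood wanted to throw out but had stashed in a corner because it was too much hassle,

stitched them into Frankenstein machines, and started reselling them. That is how the whole activity began.

(The classic example, and the origin of it all: dropping a modded L5420 Xeon into an LGA 775 board and selling it as a Go-Stop computer.)

With the pickup truck's bed and back seat packed full,

on the days I hauled home dusty junk, more than half of which would not even boot,

I hoped for a fat 200-300% markup to materialize

and became a VVIP customer of KEPCO (Korea Electric Power Corporation).

As a result, leftover hardware piled up (a dead RAM slot here, a failed BIOS mod there, and so on),

and I put Linux on it, which I had used now and then as a student, and started spinning up one pointless service after another.

That eventually led to my current living as a white-hat hacker.

The tasty days of 15 million won in revenue and 10 million won in net profit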

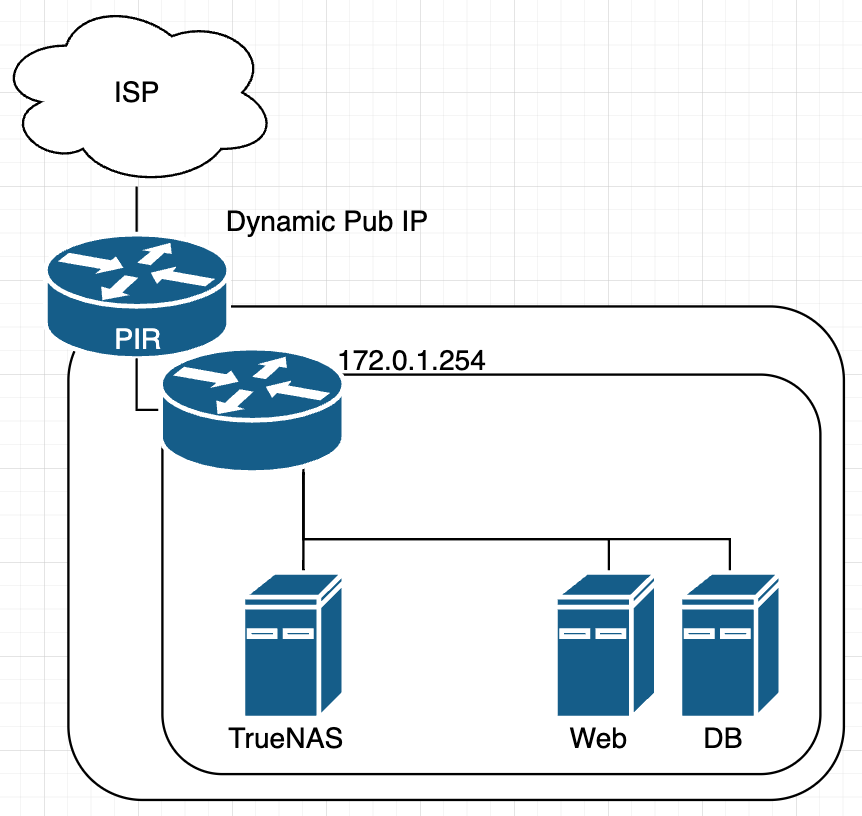

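Flush with cash, I started collecting entry-level server hardware from 2CPU.

Consumer DDR2-DDR3 hardware was far too unreliable, and data corruption was severe.

Then I quit my job because I was sick of the damn work,

and the job hunt dragged on and on,

so I ended up shutting down almost all of the servers and services I ran at home.

Now there is just a lone file server sitting under the server network...

Plus a VPN server hooked to the general network and a CA server I only power on briefly when creating accounts.

Right now only those three machines are running, and the rest of the hardware is just gathering dust and aging.

I used to run services built with JSP (I picked JSP because I had focused on Java for several years before graduating),

and used them for a while.

Although I never exposed them externally and never once got infected by malware,

I remember operating them in a fairly dangerous way from a security standpoint.

The source code still exists,

but it has been so long since I drifted away from JSP

that, while I could write it in assembly or C99 if I had to, Java has become an unfamiliar language and reviving the code is hard.

(+ Some of the design documents from back then are lost, and I can barely understand what remains.)

Now that I have a job,

I decided to build and deploy services for home use again.

The decision came completely out of the blue during Chuseok.

I'll record the process on my blog.

Since everything will be designed, built, and operated security-first,

I do wonder whether regular front-end/back-end/full-stack developers, operators, or DevOps engineers will find anything worth referencing.

lol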

0. PDCA - P

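Through a PDCA-ception process,

I plan to draw up a plan that is half waterfall, half agile.

(Agifall.. lol)

It will obviously end up ad hoc anyway,

but having a plan... makes the actual work easier.

1. Requirements analysis

For now, the services I need are as follows: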

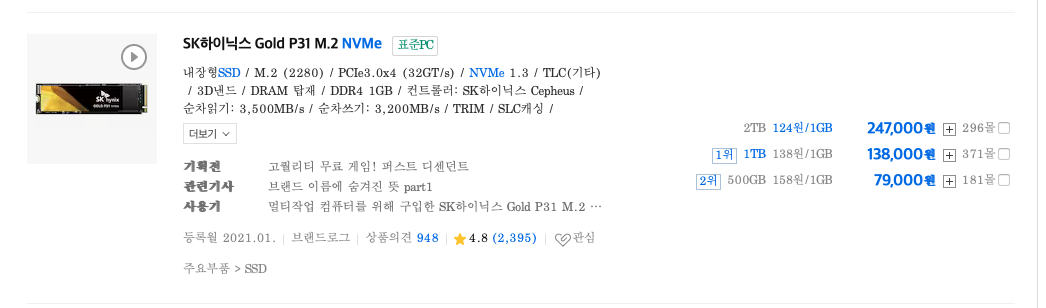

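1.A. Asset Management System (AMS)

When I buy something, I need to manage the receipt, warranty period, vendor information, and where it is deployed (office, home, a friend's place, ...). Digging these up every time I file a service request for electronics is a hassle. I previously built this system in JSP and it was really convenient: upload the purchase receipt as a PDF, enter the warranty period, and both searching and disposal handling become easy. I picked up the idea while running a network at a certain company, where I used an ITMS service and thought "this is really handy."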
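As a rough sketch of the kind of record the AMS would hold, assuming Python dataclasses: the field names (`vendor`, `warranty_until`, `location`, `receipt_pdf`, `disposed_on`) and the helpers `under_warranty` and `search` are hypothetical and are not taken from the old JSP system.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Asset:
    """One purchased item tracked by the (hypothetical) AMS."""
    name: str
    vendor: str                          # where it was bought
    purchased_on: date
    warranty_until: date                 # last day covered by the warranty
    location: str                        # e.g. "home", "office", "friend"
    receipt_pdf: Optional[str] = None    # path to the uploaded receipt, if any
    disposed_on: Optional[date] = None   # set once the item is written off

    def under_warranty(self, today: date) -> bool:
        """True while the item is not disposed and the warranty has not expired."""
        return self.disposed_on is None and today <= self.warranty_until


def search(assets: List[Asset], keyword: str) -> List[Asset]:
    """Case-insensitive substring search over name and vendor."""
    k = keyword.lower()
    return [a for a in assets if k in a.name.lower() or k in a.vendor.lower()]
```

In a real build these fields would live in a DB table rather than in memory; the sketch only pins down what has to be stored per item.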

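1.B. Vehicle log (Vehicle Service History Management System, VeSH)

I'm already on the third car I have owned. So far I have printed a form and filled it in by hand, but not being able to check it anytime, anywhere is a real pain. I want to digitize this.

1.C. Finance & Tax Management System (Financial Briefer, FBi)

I keep track in Excel of which insurance policies I hold, the expected withholding tax, and which tax-relief provisions apply and how. It's too much hassle. Let's digitize it.

1.D. Service Integration System (ERP like Landing Page, ELP)

This page ties all the services described above together so they can be used from a single landing page. It must also let me operate the services with admin privileges and manage the login scheme, information access history, and so on. In particular, the DBs will not all be crammed into a single server; I plan to use separate servers by type (finance/vehicle/assets), so the page must be able to manage them in an integrated way. It must also keep logs that cannot be erased even if an account is hijacked, so that traces of an intrusion can be checked later.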
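One common way to approach the "log that cannot be erased" requirement is a hash chain, where each entry stores the hash of the previous one. The sketch below is a swapped-in illustration, not a design decided in this post: it makes edits and deletions in the middle of the log detectable rather than impossible, and the names `append`, `verify`, and `GENESIS` are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry


def _digest(prev_hash: str, record: Dict[str, str]) -> str:
    """SHA-256 over the previous hash plus a canonical JSON form of the record."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: List[dict], record: Dict[str, str]) -> None:
    """Append a record, chaining it to the hash of the last entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": _digest(prev_hash, record)})


def verify(log: List[dict]) -> bool:
    """Recompute the chain; any edited or removed middle entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Note that cutting entries off the tail is not detected by this check alone, so the latest hash would still have to be shipped to a separate host that the hijacked account cannot write to.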

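What else would be worth building...

2. Functional analysis and methodology

2.0 Network + server HW architecture

For now, the rough build plan is as follows.

Apart from the 3 tiers (web, WAS, DB), everything is already built.

The reason I went out of my way to separate the networks like that is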

https://thewanderer.tistory.com/94

Personal server network separation log (Feat. NAT & Sniffing) - work in progress

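this post, which I never finished.

lol...

The air gap matters. Network separation.

I vented to GPT about this too, but building ZTNA ("zetna", Zero Trust Network Architecture)

at home makes no sense.

A home network does not even need SSO...

In the current server network, static IPs are assigned so that each host's address is predictable,

and each service is mapped via port forwarding.

What matters is the management SSH connections and host-to-host communication:

just as you split VNets in cloud computing,

host-to-host communication has to be restricted to the extreme for things to be at least somewhat safer.

The diagram is a bit odd, but

let's assume the DHCP server on the server network router (Server Intranet Router, hereafter SIR) manages the IPs.

In that case:

- A web page request packet is delivered to the firewall.

- Only if it is an HTTP request does the firewall pass it on to the web service.

(Here HTTP means the web request itself, setting aside the TLS encryption part of HTTPS.)

- The web server sends dynamic page requests to the WAF. (proxying)

- The WAF passes only legitimate requests on to the WAS.

- The WAS sends queries to the DB server when DB access is needed.

- A bastion host is in place, and SSH connections go through it.

- The DB has all traffic blocked except inbound packets from the WAS and SSH connection requests from the bastion host.

(The router blocks inbound traffic as the first layer, and the server's own routing table sends outbound traffic to null as the second layer.)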

-

...
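The flow described in the bullets above boils down to a default-deny allow-list. Here is a minimal sketch of that idea, assuming logical host names instead of real addresses; the `Flow` tuple, the service labels, and the choice to let the bastion SSH into every host are assumptions for illustration (the post only says that SSH goes through the bastion).

```python
from typing import NamedTuple, Set


class Flow(NamedTuple):
    src: str       # host that opens the connection
    dst: str       # host that receives it
    service: str   # coarse label, not a port number


HOSTS = ("firewall", "web", "waf", "was", "db")

# Request path from the bullets: firewall -> web -> WAF -> WAS -> DB.
ALLOWED: Set[Flow] = {
    Flow("firewall", "web", "http"),
    Flow("web", "waf", "http"),
    Flow("waf", "was", "http"),
    Flow("was", "db", "sql"),
}
# Assumption: the bastion may open SSH sessions to every host.
ALLOWED |= {Flow("bastion", host, "ssh") for host in HOSTS}


def is_allowed(src: str, dst: str, service: str) -> bool:
    """Default deny: only flows listed in ALLOWED may be initiated."""
    return Flow(src, dst, service) in ALLOWED
```

The model only covers who may open a connection; reply packets are assumed to be handled by stateful connection tracking on whatever device enforces the rules.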

So, to implement this for real:

- The SIR drops all packets with source IP 10.0.0.0/26 and destination 10.0.0.0/26.

(Deny All policy, sent to null in the routing table)

- To allow updates, the PIR (Public Intranet Router) does not block traffic separately.

- The SIR port forwards are as follows (ports unrelated to the 3 tiers are omitted):

SSH (PIR CIDR -> Bastion)

HTTPS (PIR CIDR -> Firewall or Web server)
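The SIR rules listed above can be modeled with Python's standard `ipaddress` module. Only 10.0.0.0/26 comes from this post; the PIR CIDR, the SIR's PIR-side address, and the bastion and web addresses below are hypothetical placeholders. The sketch also assumes that intra-subnet traffic actually passes through the SIR, which the post does not state.

```python
import ipaddress

SERVER_NET = ipaddress.ip_network("10.0.0.0/26")    # from the post
PIR_NET = ipaddress.ip_network("192.168.0.0/24")    # hypothetical PIR CIDR
SIR_WAN = ipaddress.ip_address("192.168.0.2")       # hypothetical SIR address on the PIR side
PORT_FORWARDS = {
    22: "10.0.0.10",    # SSH   -> bastion (hypothetical address)
    443: "10.0.0.20",   # HTTPS -> firewall or web server (hypothetical address)
}


def sir_decision(src: str, dst: str, dst_port: int) -> str:
    """Return 'drop', 'pass', or 'forward:<ip>' for one packet seen by the SIR."""
    s = ipaddress.ip_address(src)
    d = ipaddress.ip_address(dst)
    # Rule 1: server-to-server traffic inside 10.0.0.0/26 is dropped.
    if s in SERVER_NET and d in SERVER_NET:
        return "drop"
    # Rule 2: port forwards, only for clients coming from the PIR CIDR.
    if s in PIR_NET and d == SIR_WAN and dst_port in PORT_FORWARDS:
        return "forward:" + PORT_FORWARDS[dst_port]
    # Assumption: servers may reach outside networks (updates via the PIR).
    if s in SERVER_NET:
        return "pass"
    return "drop"
```

A literal deny-all between servers would also block the WAS to DB path, so the real rule set presumably needs explicit exceptions placed ahead of rule 1.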

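The open question right now is which middleware to use for the F/W and the WAF.

If I simply go with JSP or PHP and use Apache or Nginx,

I can use a WAF that plugs into that middleware (no separate server needed),

but that depends on which language/framework I choose, so it is undecided for now.

So for the network, once the SSL (here meaning Server Side Language, not the TLS predecessor) and the framework are decided,

I'll draw the rest of the diagram after that.

The diagram so far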

2.1 SSL + Framework selection

I'm attaching my discussion with consultant GPT as a collapsed section.

(Beware: the GPT chat is enormously long.)

Author

I have experience with: JSP dev (four years at enterprise level), ASP hacking (merely wrote a web shell, basically no development experience), and PHP for basic dev (building a demo website for penetration training purposes, not for service operation). What server side language do you suggest for:

- robust service

- fast dev and a constantly changing architecture (since I'll be the sole dev-sec-ops member and the service demands may change in the future) → a steep or gentle learning curve does not matter since I can handle it; I have programming experience with VHDL, C, C++, Java, Python, Web with JSP, R, VB and more.

- If there's a well known and widely used framework for a specific language, consider it (e.g. Spring Boot, though I've got no experience with any framework, WordPress and so on.)

- Unlike PHP or ASP (even they do), many server side languages require dedicated WAS middleware such as Tomcat or JEUS. If available for free, consider those middlewares.

- I'm considering MariaDB, but if there are other worthwhile free options (e.g. MySQL), please suggest them.

GPT

Given your experience and requirements, here are some server side language options and their associated frameworks and middleware, along with their fit for your needs:

1. Java with Spring Boot

Pros:

• Robustness: Spring Boot is widely used for enterprise level applications and provides a robust framework for building scalable and maintainable services.

• Flexibility: Spring Boot’s microservices architecture is adaptable to changing requirements, making it suitable for evolving service demands.

• Framework: Spring Boot simplifies development with built in support for various functionalities (e.g., security, data access, messaging).

• Free Middleware: Apache Tomcat is a popular open-source servlet container often used with Spring Boot.

Cons:

• Learning Curve: Spring Boot has a learning curve, but with your Java experience, this should be manageable.

Use Case: Ideal for building complex and scalable applications with a well-supported framework.

2. Python with Django or Flask

Django:

• Robustness: Django is a high level framework that emphasizes rapid development and clean design, with many built-in features (e.g., authentication, ORM).

• Framework: Django is known for its “batteries included” approach, offering a comprehensive set of tools for building web applications.

• Free Middleware: Django comes with its own lightweight web server, and you can use it with other servers like Gunicorn or uWSGI.

Flask:

• Flexibility: Flask is a micro framework that provides more control over components, allowing you to build services tailored to specific needs.

• Framework: Flask is minimalistic and provides flexibility for integrating with various libraries and tools.

Cons:

• Framework vs Flexibility: Django might be too opinionated if you need a lean setup, while Flask requires more manual integration for features.

Use Case: Ideal for rapid development and prototyping, with Django offering more built-in features and Flask providing more flexibility.

3. Node.js with Express

Pros:

• Fast Development: Node.js is known for its non-blocking I/O and speed, and Express is a minimal and flexible web application framework.

• Flexibility: Express allows for rapid development and easy integration with various tools and databases.

• Free Middleware: Node.js applications are often deployed using free and open-source servers like Nginx.

Cons:

• Single Threaded: While Node.js handles I/O operations efficiently, it might not be suitable for CPU bound tasks.

Use Case: Suitable for building scalable, real-time applications with a focus on fast development and flexibility.

4. Ruby with Ruby on Rails

Pros:

• Rapid Development: Ruby on Rails is known for its convention-over-configuration approach, which speeds up development.

• Framework: Rails provides a comprehensive framework with built-in tools and best practices for building web applications.

• Free Middleware: Rails applications are commonly deployed with free servers like Puma or Passenger.

Cons:

• Performance: Rails applications may have performance concerns at scale, but this can often be mitigated with proper optimization and scaling strategies.

Use Case: Ideal for rapid development with a focus on convention and productivity.

Database Options:

• MariaDB: A fork of MySQL with additional features and open-source licensing. It’s a strong choice if you’re already considering it.

• MySQL: Widely used and well-supported, also open-source. Suitable for many web applications.

• PostgreSQL: Known for its advanced features and robustness, it’s a strong alternative to MariaDB and MySQL.

Recommendation:

Based on your experience and needs:

• For robustness and scalability: Java with Spring Boot is a solid choice, especially if you’re comfortable with Java and looking for a feature-rich framework.

• For rapid development and flexibility: Python with Django or Flask would be excellent choices, with Django offering more built-in features and Flask providing more flexibility.

• For modern, scalable applications: Node.js with Express is a good option if you’re looking for a JavaScript-based solution with fast development cycles.

Each option has its strengths, so your choice will depend on which factors are most important for your current and future projects.

Author

For each option given, list the free middleware.

GPT

Here’s a list of free middleware options for each of the server side languages and frameworks mentioned:

1. Java with Spring Boot

• Apache Tomcat: A widely used open source servlet container and web server for running Java applications. It’s commonly used with Spring Boot.

• Jetty: Another open source servlet container that is lightweight and often used for embedded or stand-alone applications.

• WildFly (formerly JBoss): An open source Java EE application server that can be used to run enterprise Java applications, including those built with Spring Boot.

• Apache Karaf: A modular and flexible OSGi container that can be used for deploying and managing Java applications.

2. Python with Django or Flask

• Gunicorn (Green Unicorn): A pre fork worker model for handling Python web applications. It’s commonly used with Django and Flask for serving applications.

• uWSGI: A versatile application server that can serve Django or Flask applications and supports various protocols.

• Daphne: An HTTP, HTTP2, and WebSocket protocol server for ASGI and Django Channels, often used with Django for handling asynchronous protocols.

• Waitress: A production-quality pure-Python WSGI server with multi threaded capabilities, suitable for serving Django or Flask applications.

3. Node.js with Express

• Nginx: While primarily a web server, Nginx can also act as a reverse proxy for Node.js applications, handling load balancing, and caching.

• PM2: A process manager for Node.js applications that can handle clustering, monitoring, and logging.

• Forever: A simple command-line tool for ensuring that a Node.js script runs continuously.

• http-server: A simple, zero-configuration command line HTTP server for Node.js, often used for development and testing purposes.

4. Ruby with Ruby on Rails

• Puma: A high performance HTTP server for Ruby applications, designed to handle multiple concurrent requests.

• Passenger (Phusion Passenger): An application server for Ruby, Python, and Node.js applications, known for its ease of use and integration with web servers like Nginx and Apache.

• Unicorn: A multi process HTTP server for Ruby applications, known for its reliability and support for multiple concurrent requests.

• WEBrick: A basic Ruby HTTP server included in the Ruby standard library, suitable for development and testing.

Database Options:

• MariaDB: A free and open source database server, a drop-in replacement for MySQL with additional features.

• MySQL Community Edition: The free and open-source version of MySQL, widely used and well supported.

• PostgreSQL: An advanced, open-source relational database known for its robustness and extensibility.

These middleware options are well regarded in their respective ecosystems and should work effectively with the corresponding server-side languages and frameworks for your LAPM stack.

Author

It does not need to be LAPM, but I am going to use Linux, specifically Ubuntu or CentOS Stream, preferably Ubuntu Server. Does that change your answer?

GPT

Using Ubuntu Server or CentOS Stream doesn’t fundamentally change the recommendations for server side languages and middleware, but it can influence your choices based on compatibility and ecosystem support. Here’s how the choice of operating system could affect the middleware and tools you use:

1. Java with Spring Boot

• Apache Tomcat: Fully supported on both Ubuntu Server and CentOS Stream. Installation and management are straightforward with package managers.

• Jetty: Also compatible with both distributions, with installation support through repositories or manual setup.

• WildFly: Works well on both Ubuntu Server and CentOS Stream, with official installation guides and community support.

• Apache Karaf: Supported on both distributions, with installation options via package managers or manual configuration.

2. Python with Django or Flask

• Gunicorn: Available for both Ubuntu Server and CentOS Stream, easily installable via package managers (pip for Python).

• uWSGI: Supported on both distributions, with packages available or installation via pip.

• Daphne: Compatible with Ubuntu Server and CentOS Stream, installation via pip.

• Waitress: Works on both operating systems, easily installed via pip.

3. Node.js with Express

• Nginx: Supported on both Ubuntu Server and CentOS Stream. It’s a versatile web server and reverse proxy, widely used for Node.js applications.

• PM2: Works on both distributions, installed via npm (Node.js package manager).

• Forever: Available for both operating systems, installed via npm.

• http-server: Also works on both, used mainly for development and testing, installed via npm.

4. Ruby with Ruby on Rails

• Puma: Fully supported on both Ubuntu Server and CentOS Stream, installation via RubyGems.

• Passenger: Compatible with both operating systems, with installation options for integration with Nginx or Apache.

• Unicorn: Works well on both distributions, installed via RubyGems.

• WEBrick: Included in the Ruby standard library, available on both operating systems.

Database Options:

• MariaDB: Supported on both Ubuntu Server and CentOS Stream. Installation is straightforward via package managers or official repositories.

• MySQL Community Edition: Available for both distributions, with easy installation through package managers or official repositories.

• PostgreSQL: Compatible with both Ubuntu Server and CentOS Stream, with installation support through package managers.

Summary

The choice between Ubuntu Server and CentOS Stream mainly affects package management and default repositories. Both operating systems are well supported and capable of running the recommended middleware and server side technologies efficiently.

• Ubuntu Server: Known for its user-friendly package management with apt and extensive community support.

• CentOS Stream: Uses yum or dnf for package management and is well-supported in enterprise environments.

Both options will support the middleware and frameworks discussed, so your choice can be based on personal preference, familiarity, or specific requirements for your environment.

Author

Could you search the web and find the market share of each option?

GPT

Certainly! Here’s a summary of the market share for the server side technologies and operating systems mentioned:

1. Operating Systems

• Ubuntu Server:

• Market Share: Ubuntu Server is one of the most popular Linux distributions for servers. It holds a significant share in the market due to its ease of use, regular updates, and strong community support. As of recent estimates, Ubuntu Server holds around 30-40% of the Linux server market.

• CentOS Stream:

• Market Share: CentOS has traditionally been a popular choice for enterprise environments, but CentOS Stream, which is a rolling-release distribution, has seen a shift in its user base. CentOS Stream is now a bridge between Fedora and Red Hat Enterprise Linux (RHEL), and while its market share is growing, it is still less prevalent compared to traditional CentOS and Ubuntu Server. CentOS as a whole previously had a market share of about 10-15% but this has shifted with the introduction of CentOS Stream.

2. Server-Side Languages and Frameworks

• Java with Spring Boot:

• Market Share: Java is one of the most widely used languages in enterprise environments, and Spring Boot is a leading framework for building Java-based applications. Spring Boot is very popular, with a significant share in the enterprise Java ecosystem. Estimates suggest that Java holds around 30-40% of the programming language market in enterprise environments.

• Python with Django or Flask:

• Market Share: Python is a popular language for web development, and Django and Flask are two of the leading frameworks. Python’s popularity has been rising, and it holds a significant share in web development. As of recent estimates, Python holds about 20-30% of the programming language market, with Django being one of the top web frameworks.

• Node.js with Express:

• Market Share: Node.js has seen significant adoption due to its non-blocking architecture and scalability. Express is the most popular Node.js framework. Node.js, as a runtime, has gained a substantial share of the server-side JavaScript market, with estimates suggesting around 20-25% of the server-side language market.

• Ruby with Ruby on Rails:

• Market Share: Ruby on Rails was highly popular in the past, especially for startups and rapid development. However, its market share has declined somewhat as other technologies like Node.js and Python have gained traction. Ruby holds about 5-10% of the server-side language market, with Rails being the primary framework.

3. Databases

• MariaDB:

• Market Share: MariaDB has been growing as a popular open source alternative to MySQL. It holds a substantial portion of the open-source database market, with estimates suggesting around 15-20% market share for MariaDB and MySQL combined.

• MySQL Community Edition:

• Market Share: MySQL remains one of the most widely used databases globally. It holds a significant portion of the market, with estimates suggesting around 20-25% market share for MySQL and its forks.

• PostgreSQL:

• Market Share: PostgreSQL is known for its advanced features and is gaining popularity. It holds around 15-20% of the database market, with a growing trend in adoption due to its robustness and feature set.

These estimates can vary based on the source and the specific market segment being analyzed. However, they provide a general overview of the relative popularity and market share of these technologies.

Author

Are NoSQL databases such as MongoDB usable for a web app?

GPT

Yes, NoSQL databases, including MongoDB, are commonly used for web applications and can be a good fit depending on the use case. Here’s a breakdown of their suitability and considerations:

Advantages of NoSQL Databases

1. Schema Flexibility:

• Dynamic Schema: NoSQL databases like MongoDB allow for a flexible schema, which means you can store different types of data in the same collection without needing to define a strict schema ahead of time. This is beneficial for applications where the data model may evolve over time.

2. Scalability:

• Horizontal Scaling: Many NoSQL databases are designed to scale out horizontally by distributing data across multiple servers. This can be advantageous for applications expecting large amounts of data or high traffic loads.

3. Performance:

• Optimized for Reads / Writes: NoSQL databases can be optimized for specific types of read and write operations, which can improve performance for certain workloads.

4. Ease of Development:

• Developer Friendly: The flexible nature of NoSQL databases can make development easier, especially for applications with rapidly changing requirements.

Common NoSQL Databases for Web Applications

1. MongoDB:

• Document-Oriented: Stores data in a JSON-like BSON (Binary JSON) format, which is useful for applications that require complex queries and indexing.

• Use Cases: Suitable for applications with hierarchical data, such as content management systems, real-time analytics, and data integration.

2. Cassandra:

• Column Family Store: Designed for high availability and scalability, Cassandra excels in scenarios requiring high write throughput and fault tolerance.

• Use Cases: Ideal for large-scale applications, such as recommendation engines, IoT data storage, and time-series data.

3. Redis:

• In Memory Key Value Store: Known for its speed and support for various data structures like strings, hashes, and lists.

• Use Cases: Often used for caching, session management, real-time analytics, and messaging.

4. CouchDB:

• Document Store: Similar to MongoDB but with a focus on multi-version concurrency control and reliable data storage.

• Use Cases: Useful for applications that require a high level of data consistency and reliability.

5. DynamoDB:

• Managed Key Value and Document Database: Provided by AWS, DynamoDB is fully managed and supports seamless scaling and high availability.

• Use Cases: Good for applications requiring consistent performance, scalability, and high availability, such as gaming, IoT, and mobile backends.

Considerations for Using NoSQL Databases

1. Consistency vs. Availability:

• CAP Theorem: NoSQL databases often prioritize availability and partition tolerance over consistency (eventual consistency). This trade-off should be considered based on your application’s requirements.

2. Complex Queries:

• Limitations: NoSQL databases may have limitations when it comes to complex queries and joins compared to traditional relational databases. They are better suited for certain types of queries and data models.

3. Data Integrity:

• ACID Transactions: Many NoSQL databases do not support ACID transactions in the same way as relational databases. For applications requiring strong transactional integrity, this should be carefully evaluated.

4. Ecosystem and Support:

• Tooling: Ensure that the NoSQL database you choose has sufficient tooling and community support for your needs, including integration with your chosen server-side language and frameworks.

Conclusion

NoSQL databases like MongoDB are definitely usable and often advantageous for web applications, particularly when you need schema flexibility, scalability, or specific performance optimizations. However, the choice between NoSQL and traditional SQL databases should be based on your application’s specific needs and the trade offs involved. For some use cases, a combination of NoSQL and SQL databases (polyglot persistence) can be an effective approach.

Author

Back to the performance to resource usage ratio. I know that C is about a thousand times faster than Python in general computing, which could mean one language may consume far more resources than another. Could you compare each of the options given?

GPT

Certainly! Comparing the performance and resource usage of server-side languages and frameworks can help you make an informed choice based on your application’s requirements. Here’s a comparison of the options given:

1. Java with Spring Boot

• Performance:

• Throughput: Java, especially with frameworks like Spring Boot, can handle a high volume of requests per second. Java’s performance is generally good for enterprise applications, though it can be memory-intensive.

• Resource Usage: Java applications can be more resource hungry due to the JVM (Java Virtual Machine), which uses memory for the heap, garbage collection, and JIT (Just In Time) compilation.

• Use Cases: Well suited for large scale, high performance applications where the overhead of the JVM is acceptable. Spring Boot provides tools to optimize performance, but resource usage is still higher compared to more lightweight languages.

2. Python with Django or Flask

• Performance:

• Throughput: Python is generally slower than compiled languages like C or Java. Django and Flask can handle a reasonable amount of traffic but may not be as performant as some other languages for high load scenarios.

• Resource Usage: Python applications typically use more CPU and memory compared to lower-level languages. Flask, being a micro-framework, might use fewer resources than Django, which has more built-in features.

• Use Cases: Python is ideal for rapid development and applications where development speed is prioritized over raw performance. It’s suitable for applications with moderate performance requirements.

3. Node.js with Express

• Performance:

• Throughput: Node.js is designed for non-blocking I/O operations, making it highly efficient for I/O bound tasks. It can handle many concurrent connections with relatively low overhead.

• Resource Usage: Node.js applications generally have lower memory consumption compared to Java. However, Node.js is single-threaded, which can limit its performance for CPU-bound tasks.

• Use Cases: Well-suited for real-time applications and scenarios requiring high concurrency and scalability. Node.js performs well for I/O heavy tasks but may require additional optimization for CPU bound operations.

4. Ruby with Ruby on Rails

• Performance:

• Throughput: Ruby on Rails is known for its convention-over-configuration approach, which can sometimes lead to slower performance compared to more optimized frameworks. Rails applications can be resource-intensive.

• Resource Usage: Ruby applications, including those using Rails, often have higher memory and CPU usage compared to languages like Python and Java.

• Use Cases: Suitable for applications where rapid development and ease of use are prioritized over performance. Rails provides many built-in features, which can increase resource usage.

Resource Usage Comparison

1. C/C++:

• Performance: Typically the fastest for general computing and system-level tasks due to direct compilation to machine code. C/C++ applications have minimal overhead and can be highly optimized.

• Resource Usage: Generally very efficient in terms of CPU and memory usage. Requires manual management of memory and resources.

2. Java:

• Performance: Good performance with optimization but can be slower than C/C++ due to JVM overhead. Performance can be optimized with tuning and modern JVM improvements.

• Resource Usage: More memory-intensive due to the JVM and garbage collection. Performance is generally acceptable for enterprise applications.

3. Python:

• Performance: Slower than compiled languages. Python’s performance is often improved with libraries like NumPy for numeric computations, but it’s still less efficient for CPU-bound tasks.

• Resource Usage: Generally higher memory and CPU usage compared to languages like C and Java. Suitable for applications where ease of development outweighs raw performance.

4. Node.js:

• Performance: Efficient for I/O-bound tasks and concurrent connections. Less performant for CPU-bound tasks due to its single-threaded nature.

• Resource Usage: Generally lower memory usage compared to Java. Performance is good for I/O-heavy operations.

5. Ruby:

• Performance: Slower compared to other languages for high-load applications. Performance optimizations are available but can be challenging.

• Resource Usage: Higher memory and CPU usage compared to Python and Node.js. Suitable for applications where development speed is a priority.

Summary

• Best Performance: C/C++ > Java > Node.js > Python > Ruby

• Resource Efficiency: C/C++ > Node.js > Java > Python > Ruby

Each language and framework has its strengths and trade-offs. Your choice should be based on a balance between performance, resource usage, development speed, and the specific requirements of your application.

Author

Focus on web apps for now. How do they hold up in a frugal environment? I'm planning to give each one three to four gigs of RAM, since the server (hypervisor) will have a 4C4T Xeon E3 with 16GB of DDR3 ECC Unbuffered RAM.

GPT

For a frugal environment with 16GB of RAM and a 4C4T Xeon E3 processor, managing resource usage efficiently is crucial. Here’s how each language and framework handles resource usage, focusing on web applications:

1. Java with Spring Boot

• Memory Usage:

• Java Applications: Typically have higher memory consumption due to the JVM. Spring Boot can add additional overhead compared to more lightweight frameworks.

• Heap Size: You might need to allocate a significant portion of the server’s RAM to accommodate the JVM and prevent out-of-memory issues.

• Optimization: JVM options and tuning can help manage memory usage, but Java applications generally require more RAM than other languages.

• Frugal Environment:

• Considerations: Java might not be the best choice for very limited RAM environments due to its higher memory footprint. However, for well-tuned applications and if the RAM is allocated properly, it can still be manageable.

2. Python with Django or Flask

• Memory Usage:

• Python Applications: Python applications generally use more memory than Node.js but less than Java. Django, being a full-featured framework, can be relatively heavy, while Flask is lighter.

• Optimization: Memory usage can be somewhat controlled by optimizing the application code and using efficient libraries.

• Frugal Environment:

• Considerations: Flask might be more suitable than Django for a frugal environment due to its lightweight nature. Python is manageable with 3-4GB of RAM per application, especially with Flask.

3. Node.js with Express

• Memory Usage:

• Node.js Applications: Generally have lower memory usage compared to Java. Node.js’s non-blocking I/O model helps reduce overhead.

• Efficiency: Node.js is designed to handle a large number of concurrent connections efficiently with relatively low memory usage.

• Frugal Environment:

• Considerations: Node.js is a good fit for a frugal environment due to its efficient memory usage. It handles concurrent connections well and can operate effectively within the given memory constraints.

4. Ruby with Ruby on Rails

• Memory Usage:

• Ruby Applications: Ruby on Rails can be resource-intensive, with higher memory usage compared to Python and Node.js. This is due to Rails’s feature-rich nature and the Ruby interpreter.

• Optimization: Performance can be improved with careful tuning, but Rails applications are generally heavier on memory.

• Frugal Environment:

• Considerations: Ruby on Rails might be challenging to run efficiently in a frugal environment with limited RAM. It would require careful resource management and possibly less concurrent usage to fit within the memory limits.

Resource Usage Summary for Frugal Environments

• Node.js (Express):

• Best Fit: Low memory usage, efficient for handling concurrent connections.

• RAM Usage: Suitable for environments with limited RAM (3-4GB per application).

• Python (Flask):

• Good Fit: Moderate memory usage, more suitable than Django for frugal environments.

• RAM Usage: Manageable with 3-4GB of RAM per application.

• Java (Spring Boot):

• Challenging Fit: Higher memory usage due to the JVM and Spring Boot’s overhead.

• RAM Usage: Can be demanding, might require careful tuning and more RAM.

• Ruby (Rails):

• Least Fit: Higher memory consumption and resource demands.

• RAM Usage: May be too resource-intensive for very frugal environments with limited RAM.

Recommendations

Given your server’s constraints (16GB of RAM total, with 3-4GB allocated per application):

1. Node.js (Express): Likely the best option due to its low memory footprint and efficient handling of concurrent connections.

2. Python (Flask): A good choice if you need Python’s capabilities, but ensure to optimize and manage memory carefully.

3. Java (Spring Boot): If you need Java, ensure to allocate sufficient RAM and optimize JVM settings. It’s possible but requires more careful management.

4. Ruby (Rails): Consider other frameworks or languages if memory usage is a critical constraint, as Rails may be too heavy.

For a frugal environment, prioritize frameworks and languages that offer efficient resource usage and can comfortably fit within the available memory constraints.
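To put rough numbers on the 16GB total and 3-4GB per application mentioned above, here is a minimal sketch of the arithmetic. The 2GB host reserve is an assumed figure for the hypervisor and OS, not something stated in the conversation.

```javascript
// Rough memory budget for the host described above.
// hostReserveGb is an ASSUMED figure for the hypervisor/OS, not from the conversation.
function maxAppInstances(totalGb, perAppGb, hostReserveGb) {
  const usableGb = totalGb - hostReserveGb;
  if (usableGb <= 0 || perAppGb <= 0) return 0;
  return Math.floor(usableGb / perAppGb);
}

console.log(maxAppInstances(16, 4, 2)); // 3
console.log(maxAppInstances(16, 3, 2)); // 4
```

Under that assumption only three or four application VMs fit, which is why the per-application footprint of the framework matters here.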

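Damn, this is a tough call. Finding an option that runs well in a frugal environment while still being robust and fast (a high performance-to-resource-usage ratio) is... lol, sigh.

Node.js + Express:

- is resource efficient (frugal environment), meaning memory + I/O.

- has middling performance (the language itself is fast, but it is single-threaded) -> throughput is still on the good side.

- has a fairly high market share (20-25%) -> it can help build up my hacking skills. (It is used all over the place, especially at Korean public institutions and state-owned enterprises.)

- Middleware: Nginx, PM2, Forever, http-server

For these reasons, I went with it.

(The detailed deliberation is in the collapsed consulting transcript above. If English makes you queasy, copy the whole thing and ask GPT to translate it.)

Now let's dig deeper into Node.js and Express.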

Author

Based on your detailed help, I chose to go with Node.js + Express. The MW would be (but I haven't fully decided yet) Nginx or http-server.

One of the reasons why I've been asking so much is to become a better hacker with relevant experience. Anyways.

Tell me what the web-WAS-DB architecture would look like with Node.js. Also, how do the client-side and server-side scripts run when a user accesses the webpage? For example, the JSP flow goes like the following:

- The user requests the landing page.

- Apache receives the request, then asks Tomcat to run the server-side script.

- Tomcat runs the JSP, then finds out that an SQL query needs to be sent to the DB.

- The DB returns the result to Tomcat.

- Tomcat finalises the server-side script, then returns the result to Apache.

- Apache sends the CSS, HTML, and JS, if required, to the user.

- The user's web browser renders the page with the given files.

GPT

Great choice with Node.js and Express! Here’s a detailed explanation of how the architecture and request handling flow with Node.js, Express, and a database, along with how client-side and server-side scripts interact:

Web-WAS-DB Architecture with Node.js

1. Web Server (Nginx or http-server):

• Role: Acts as a reverse proxy or static file server.

• Function:

• Nginx: Commonly used for reverse proxying requests to the Node.js application, load balancing, and serving static files like CSS, JavaScript, and images.

• http-server: A simple static file server but less common for production environments compared to Nginx.

2. Web Application Server (Node.js + Express):

• Role: Handles the application logic, routing, and interactions with the database.

• Function:

• Express: Manages routing, middleware, and handles requests from the web server. It processes incoming HTTP requests, executes business logic, and interacts with the database as needed.

3. Database:

• Role: Stores and manages application data.

• Function: Handles queries from the Node.js application, returning results that are then processed and sent back to the client.

Request Handling Flow

1. User Requests a Webpage:

• The user’s web browser sends an HTTP request to the server for a specific URL (e.g., http://example.com/).

2. Web Server Receives the Request:

• Nginx/http-server: Receives the HTTP request from the browser. If using Nginx, it typically acts as a reverse proxy and forwards the request to the Node.js application server. If using http-server, it directly serves static files.

3. Node.js + Express Processes the Request:

• Express Middleware: Handles the request, executes any middleware functions (e.g., authentication, logging), and routes the request to the appropriate route handler.

• Route Handler: Executes the necessary server-side logic, which may involve querying the database.

4. Database Interaction:

• Database Query: The Express route handler interacts with the database using a database driver, an ORM (Object-Relational Mapper), or, for document stores, an ODM (Object-Document Mapper). For example, if using MongoDB, it might use the MongoDB Node.js driver or Mongoose (an ODM).

• Database Response: The database returns the query results to the Express application.

5. Response Processing:

• Express Response: The Express route handler processes the database results, generates the appropriate HTML, JSON, or other responses, and sends them back to the web server.

6. Web Server Sends Response to Browser:

• Nginx/http-server: Forwards the response from Express to the client’s web browser. If Nginx is used, it might also handle caching or compression of the response.

7. Browser Renders the Page:

• Client-Side Script Execution:

• HTML/CSS/JS: The browser processes the HTML, CSS, and JavaScript received from the server.

• JavaScript Execution: Client-side JavaScript is executed in the browser, handling dynamic content, user interactions, and making further AJAX requests if needed.
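Step 3 above (middleware first, route handler last) can be sketched with a toy chain runner. This is a stand-in written for illustration, not the Express API itself; `runChain`, `logger`, `requireUser`, and `landingPage` are hypothetical names, and only the `(req, res, next)` calling convention is borrowed from Express.

```javascript
// Toy stand-in for a middleware chain; NOT the Express API itself.
function runChain(middlewares, req) {
  const res = { status: 200, body: null, log: [] };
  let index = 0;
  function next() {
    const mw = middlewares[index++];
    if (mw) mw(req, res, next);
  }
  next();
  return res;
}

// Logging middleware: records the request, then passes control on.
function logger(req, res, next) {
  res.log.push(`${req.method} ${req.path}`);
  next();
}

// Authentication middleware: stops the chain unless a (hypothetical) user is set.
function requireUser(req, res, next) {
  if (!req.user) {
    res.status = 401;
    res.body = "login required";
    return; // next() is never called, so the route handler does not run
  }
  next();
}

// Route handler: the last link, where the application logic would live.
function landingPage(req, res) {
  res.body = `Hello, ${req.user}`;
}

const chain = [logger, requireUser, landingPage];
console.log(runChain(chain, { method: "GET", path: "/", user: "owner" }).body);
console.log(runChain(chain, { method: "GET", path: "/" }).status);
```

The point of the ordering is that a request rejected by an earlier middleware never reaches the code that talks to the database.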

Detailed Flow Example

1. User Request:

• URL: http://example.com/products/123

• Action: The user requests a product page with ID 123.

2. Nginx/Proxy:

• Nginx: Receives the request and forwards it to the Node.js server.

3. Node.js + Express:

• Express Routing: The Express router directs the request to the route handler for /products/:id.

• Database Query: The route handler queries the database for product details with ID 123.

4. Database Interaction:

• Query: SELECT * FROM products WHERE id = 123;

• Response: The database returns the product data.

5. Express Response:

• Processing: The Express route handler formats the data into an HTML page or JSON object.

• Send Response: Express sends the HTML or JSON response back to Nginx.

6. Nginx to Browser:

• Response: Nginx forwards the HTML, CSS, and JavaScript to the browser.

7. Browser Rendering:

• HTML/CSS: The browser renders the page layout and styles.

• JavaScript: Any client-side scripts are executed, handling dynamic content and interactions.
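The `/products/:id` example above can be sketched in two small functions: one that extracts the `:id` parameter from the path, and one that turns it into a parameterized query rather than pasting it into the SQL text. Both function names are hypothetical. The `{ text, values }` shape and the `$1` placeholder follow what the node-postgres (`pg`) driver accepts, which is an assumption about the eventual driver choice.

```javascript
// Match a path against a pattern such as "/products/:id" and extract parameters.
// Illustrative only; Express has its own, more capable router.
function matchRoute(pattern, path) {
  const patternParts = pattern.split("/").filter(Boolean);
  const pathParts = path.split("/").filter(Boolean);
  if (patternParts.length !== pathParts.length) return null;
  const params = {};
  for (let i = 0; i < patternParts.length; i++) {
    const part = patternParts[i];
    if (part.startsWith(":")) {
      params[part.slice(1)] = pathParts[i];
    } else if (part !== pathParts[i]) {
      return null;
    }
  }
  return params;
}

// Build a parameterized query instead of concatenating the id into SQL text.
function productQuery(rawId) {
  if (!/^[0-9]+$/.test(rawId)) return null; // reject anything but digits
  return { text: "SELECT * FROM products WHERE id = $1", values: [Number(rawId)] };
}

const routeParams = matchRoute("/products/:id", "/products/123");
console.log(routeParams);
console.log(productQuery(routeParams.id));
console.log(productQuery("123 OR 1=1")); // null
```

Keeping the value out of the SQL string is what prevents the classic injection case in the last line.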

Key Points

• Node.js + Express: Handles application logic, routing, and database interactions efficiently. It processes requests and responses asynchronously, which is beneficial for handling concurrent connections.

• Nginx: Often used for reverse proxying and serving static content, reducing the load on the Node.js application server.

• Database Interaction: Node.js communicates with the database to retrieve or store data, with the results used to generate the response sent to the client.

This architecture ensures that your application is scalable and efficient, with Node.js and Express handling the core application logic and Nginx or http-server managing static content and request routing.

Author

Okay, good job. Then, I'm going to use:

- Latest Ubuntu Server LTS, whatever that would be

- Nginx, also latest version

- Node.js with Express as WAS

I like challenging stuff, so maybe I will use PostreSQL (is the name correct?) since I am already very familiar with MySQL/MariaDB, too familiar if you ask me. Are there any differences between MariaDB and that Postre thingy when it comes to web app usage?

GPT

Using PostgreSQL (yes, that is the correct spelling; it is commonly shortened to “Postgres”) is a great choice for expanding your database experience, especially if you’re already familiar with MySQL/MariaDB. Here’s a comparison of PostgreSQL and MariaDB, highlighting their differences and considerations for web application usage:

PostgreSQL vs. MariaDB

1. General Overview

• PostgreSQL:

• Type: Object-relational database management system (ORDBMS).

• Features: Known for its advanced features, extensibility, and standards compliance. Supports complex queries, data integrity, and custom data types.

• Strengths: ACID compliance, rich SQL capabilities, advanced indexing, support for JSONB, and full-text search.

• MariaDB:

• Type: Relational database management system (RDBMS).

• Features: A fork of MySQL, designed to be fully compatible with MySQL while adding new features and improvements.

• Strengths: High performance, ease of use, and compatibility with MySQL applications.

2. SQL Features and Compliance

• PostgreSQL:

• Advanced SQL Support: PostgreSQL is known for its comprehensive SQL compliance and support for advanced SQL features like window functions, common table expressions (CTEs), and recursive queries.

• Extensibility: Supports custom functions, data types, and operators, allowing you to extend the database capabilities as needed.

• MariaDB:

• SQL Features: MariaDB includes many features from MySQL but also offers additional capabilities like the Aria storage engine and support for more storage engines.

• Compatibility: Generally aims to be compatible with MySQL, but some advanced features and syntax may differ.

3. Performance and Scalability

• PostgreSQL:

• Performance: Strong performance for complex queries and large datasets. Supports indexing, partitioning, and parallel query execution to optimize performance.

• Scalability: Capable of handling large volumes of data and high transaction rates. Provides robust replication and sharding options.

• MariaDB:

• Performance: Known for high performance, especially with read-heavy workloads. Ships with several storage engines, with InnoDB as the default; TokuDB, which older material often lists, has been deprecated and removed in recent MariaDB releases.

• Scalability: Scalable with support for replication, clustering (Galera Cluster), and partitioning.

4. Data Integrity and ACID Compliance

• PostgreSQL:

• ACID Compliance: PostgreSQL is fully ACID-compliant, ensuring data integrity and consistency through transactions.

• MVCC: Uses Multi-Version Concurrency Control (MVCC) to handle concurrent transactions and avoid locking issues.

• MariaDB:

• ACID Compliance: Also ACID-compliant, with support for transactions and consistent data integrity.

• Storage Engines: InnoDB, the default storage engine, provides transaction support and ACID compliance.

5. JSON and Document Storage

• PostgreSQL:

• JSONB Support: PostgreSQL has robust support for JSON and JSONB (binary JSON) data types, allowing for efficient storage and querying of semi-structured data.

• Flexibility: Supports document-oriented operations and indexing on JSON data.

• MariaDB:

• JSON Support: Provides JSON functions, but its JSON type is an alias for LONGTEXT with a validity check rather than a dedicated binary format, so support is generally less mature compared to PostgreSQL.

• Use Cases: Suitable for applications requiring JSON data storage but with fewer advanced features than PostgreSQL.

6. Community and Ecosystem

• PostgreSQL:

• Community: Has an active and growing community, with extensive documentation and third-party tools available.

• Ecosystem: Offers a wide range of extensions and tools for various use cases, including GIS, full-text search, and more.

• MariaDB:

• Community: Strong community support, with ongoing development and contributions. Backed by the MariaDB Foundation.

• Ecosystem: Includes tools and extensions like MariaDB ColumnStore for analytics and MariaDB MaxScale for database management.

Conclusion

• PostgreSQL: If you want to work with advanced features, complex queries, and custom data types, PostgreSQL is a powerful choice. It offers a rich set of features and is well-suited for applications requiring complex data operations and extensibility.

• MariaDB: If you prefer a database system that is highly compatible with MySQL and offers good performance for a variety of workloads, MariaDB is a strong option. It provides familiarity and ease of use with additional enhancements over MySQL.

Given your background and interest in challenging tasks, PostgreSQL might offer the opportunity to explore advanced features and functionality. However, MariaDB is also a solid choice if you value compatibility with MySQL and a familiar environment.

Author

I saw many sites (ones I pentested) using the same page with differing JSON to render the result. (Great for reducing traffic, but as a result the browser's back button doesn't work the way it does elsewhere.) I need to get used to that (or rather, I need to get familiar with JSON ping-ponging). In that case, Postgre would do better, is that right?

GPT

Yes, PostgreSQL’s support for JSON and JSONB can be quite advantageous in scenarios where you need to handle and manipulate JSON data effectively. Here’s why PostgreSQL might be better suited for such use cases:

Advantages of PostgreSQL for JSON Handling

1. Advanced JSON Functions and Operators:

• JSONB Support: PostgreSQL’s JSONB type allows for efficient storage and querying of JSON data. It supports indexing, which can significantly improve the performance of queries involving JSON data.

• Rich Functionality: PostgreSQL provides a wide range of functions and operators to manipulate JSON data, such as ->, ->>, #>, #>>, and jsonb_set. These allow for sophisticated querying and updating of JSON fields.

2. Indexing and Performance:

• GIN Indexes: PostgreSQL supports Generalized Inverted Index (GIN) indexes on JSONB columns, which can speed up containment and key-existence queries on JSON fields. (GiST, the Generalized Search Tree, is a separate index type that has no built-in operator class for JSONB.)

• Efficient Queries: You can perform complex queries and filtering on JSON data, making it easier to work with dynamic and structured data.

3. Data Flexibility:

• Document Storage: PostgreSQL can handle both structured and semi-structured data, allowing you to store and retrieve JSON documents with flexibility.

• Custom Functions: You can define custom functions and procedures to work with JSON data, tailoring the database to your specific needs.

4. Schema Evolution:

• Dynamic Schema: With JSONB, you can manage evolving schemas more easily. You can add or remove fields in your JSON documents without altering the database schema.
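To build some intuition for the path operators named above, here is a JavaScript emulation of what `#>` (value at a path, as JSON) and `#>>` (value at a path, as text) return. It is only an approximation for illustration: the function names are hypothetical, nothing here runs inside PostgreSQL, and the exact text formatting of nested objects differs from what the database prints.

```javascript
// JavaScript emulation of PostgreSQL's JSON path operators, for intuition only.
function jsonPath(doc, path) {           // roughly: doc #> '{a,b}'
  let current = doc;
  for (const key of path) {
    if (current === null || typeof current !== "object" || !(key in current)) {
      return undefined;                  // stands in for SQL NULL
    }
    current = current[key];
  }
  return current;
}

function jsonPathText(doc, path) {       // roughly: doc #>> '{a,b}'
  const value = jsonPath(doc, path);
  if (value === undefined || value === null) return null;
  return typeof value === "string" ? value : JSON.stringify(value);
}

// Hypothetical asset record, loosely modelled on the asset management idea.
const asset = { name: "NAS", warranty: { months: 24, vendor: "ACME" } };
console.log(jsonPathText(asset, ["warranty", "vendor"])); // ACME
console.log(jsonPathText(asset, ["warranty", "months"])); // 24 (as text)
console.log(jsonPathText(asset, ["missing"]));            // null
```

The distinction to remember is that the text variants hand back strings, so numeric comparisons in SQL need a cast.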

Handling JSON Ping-Ponging

In the context of JSON “ping-ponging,” where a single page uses different JSON responses to render content dynamically:

• Single Page Application (SPA): Using JSON to update content dynamically on the same page can be efficiently managed with PostgreSQL’s JSON capabilities. You can structure your data in JSONB and perform updates or fetches as needed.

• Caching and Performance: PostgreSQL’s support for indexing JSONB fields can help in optimizing queries that retrieve or filter JSON data, improving overall performance.

• Complex Queries: If your application needs to perform complex queries or aggregations on JSON data, PostgreSQL’s advanced querying capabilities will be beneficial.
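The "same page, different JSON" pattern boils down to a render function that turns whatever JSON arrives into markup. A minimal sketch follows, assuming hypothetical `date` and `note` fields. Since the JSON ultimately comes from stored user input, every value is HTML-escaped before it is placed into the page.

```javascript
// Escape the five characters that matter when inserting text into HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Render table rows from a JSON payload; the field names are hypothetical.
function renderRows(records) {
  return records
    .map((r) => `<tr><td>${escapeHtml(r.date)}</td><td>${escapeHtml(r.note)}</td></tr>`)
    .join("");
}

console.log(renderRows([
  { date: "2024-09-17", note: "Oil change" },
  { date: "2024-09-18", note: "<script>alert(1)</script>" },
]));
```

In a browser the same function would run after each fetch, replacing the table body while the page itself stays loaded.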

Summary

For applications heavily reliant on JSON data manipulation, dynamic content updates, and efficient querying, PostgreSQL’s JSONB support offers robust features and performance advantages. It provides a comprehensive set of tools for working with JSON data, making it a strong choice for scenarios where JSON ping-ponging or complex JSON data handling is required.

If you need to become familiar with these JSON handling techniques and optimize performance in such use cases, PostgreSQL will offer the advanced functionality and flexibility needed.

Author

Sounds lovely, I am going to use Postgre. It will be a great opportunity to learn new stuff. Elaborate on ACID, I've got no idea what that is.

GPT

ACID is a set of properties that ensure reliable transactions in a database system. It stands for Atomicity, Consistency, Isolation, and Durability. Understanding these properties is crucial for maintaining data integrity and reliability in database operations. Here’s a detailed look at each component of ACID:

1. Atomicity

• Definition: Atomicity ensures that a transaction is treated as a single, indivisible unit of work. This means that either all operations within the transaction are completed successfully, or none of them are applied. If any part of the transaction fails, the entire transaction is rolled back to its initial state.

• Example: Consider a banking transaction where money is transferred from Account A to Account B. Atomicity ensures that either both the debit from Account A and the credit to Account B occur, or neither happens if an error occurs.

2. Consistency

• Definition: Consistency ensures that a transaction brings the database from one valid state to another. The database must satisfy all predefined rules, constraints, and data integrity conditions before and after the transaction.

• Example: If a transaction involves updating the balance of accounts, consistency ensures that all business rules are followed (e.g., the total amount of money in the system remains the same if no money is created or destroyed).

3. Isolation

• Definition: Isolation ensures that concurrent transactions do not interfere with each other. Each transaction should operate as if it is the only transaction being executed. The results of a transaction should not be visible to other transactions until the transaction is complete.

• Example: If two transactions are attempting to update the same record simultaneously, isolation ensures that the operations do not overlap or cause conflicts. For instance, two users attempting to withdraw money from the same bank account should not result in an overdraft.

• Levels of Isolation:

• Read Uncommitted: Allows transactions to read uncommitted changes from other transactions (lowest level of isolation). PostgreSQL accepts this level but treats it as Read Committed, so dirty reads do not occur there.

• Read Committed: Ensures that transactions only see committed changes.

• Repeatable Read: Ensures that if a transaction reads a record, subsequent reads in the same transaction will see the same data.

• Serializable: Ensures the highest level of isolation, making transactions appear as if they are executed sequentially.

4. Durability

• Definition: Durability ensures that once a transaction is committed, its changes are permanent and survive any subsequent system failures or crashes. The changes made by the transaction are stored in non-volatile memory.

• Example: After a successful transaction that transfers money between accounts, the changes must be saved to disk. Even if the system crashes immediately afterward, the transaction’s effects should still be present when the system is restored.
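The bank transfer used as the running example can be sketched in memory to show atomicity and a consistency rule together. This is a toy model, not how PostgreSQL implements transactions: the snapshot stands in for the state a rollback restores, and the "no negative balance" rule is an assumed constraint.

```javascript
// Toy all-or-nothing transfer; balances are whole currency units.
function transfer(accounts, from, to, amount) {
  const snapshot = { ...accounts };       // state to restore on failure
  try {
    if (!(from in accounts) || !(to in accounts)) throw new Error("unknown account");
    if (!Number.isInteger(amount) || amount <= 0) throw new Error("invalid amount");
    accounts[from] -= amount;             // debit
    if (accounts[from] < 0) throw new Error("insufficient funds"); // assumed consistency rule
    accounts[to] += amount;               // credit
    return true;                          // "commit"
  } catch (err) {
    // "rollback": undo every partial change, including the debit above
    for (const key of Object.keys(accounts)) delete accounts[key];
    Object.assign(accounts, snapshot);
    return false;
  }
}

const accounts = { A: 100, B: 50 };
console.log(transfer(accounts, "A", "B", 30), accounts);
console.log(transfer(accounts, "A", "B", 500), accounts);
```

The second call fails after the debit has already been applied, and the rollback is what keeps the money from vanishing.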

ACID in Practice

• Transaction Management: Databases use mechanisms such as logging and checkpointing to implement ACID properties. For example, write-ahead logging (WAL) ensures that changes are recorded before they are applied, providing durability.

• Concurrency Control: Techniques like locking and multi-version concurrency control (MVCC) help manage isolation by ensuring that concurrent transactions do not interfere with each other.

• Data Integrity: Constraints, triggers, and rules ensure consistency by enforcing data integrity during transactions.
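The write-ahead logging idea mentioned above can be reduced to a few lines: record the change in a log first, apply it second, and rebuild the data from the log after a crash. This is a toy sketch with hypothetical function names. A real database flushes the log to disk and uses checkpoints to bound replay time, neither of which is modelled here.

```javascript
// Toy write-ahead log: record the change first, apply it second.
function createStore() {
  return { log: [], data: {} };
}

function put(store, key, value) {
  store.log.push({ key, value }); // 1. append to the log (a real system flushes this to disk)
  store.data[key] = value;        // 2. only then apply it to the live data
}

// After a crash the in-memory data is gone; replaying the log rebuilds it.
function recover(log) {
  const data = {};
  for (const entry of log) data[entry.key] = entry.value;
  return data;
}

const store = createStore();
put(store, "odometer", 120000);
put(store, "odometer", 120450);
put(store, "lastService", "2024-09-17");
console.log(recover(store.log));
```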

Importance of ACID

• Reliability: ACID properties ensure that database transactions are processed reliably and that the database remains in a consistent state even in the face of errors or system failures.

• Data Integrity: By enforcing these properties, databases maintain accurate and valid data throughout their operations.

Summary

ACID is essential for maintaining the integrity and reliability of transactions in a database. By ensuring that transactions are atomic, consistent, isolated, and durable, ACID properties help prevent data corruption, inconsistencies, and loss, making them fundamental to robust database management systems like PostgreSQL.

Author

Ah, I didn't know it was an acronym for that. Thx.

GPT

You’re welcome! ACID principles are fundamental to understanding how databases maintain integrity and reliability. If you have any more questions about databases or anything else, feel free to ask!

Author

Yeah, I learnt about that back when I was in college. Kinda forgot about it.

GPT

It’s easy to forget some of those details over time, especially with everything else you’ve been working on. If you ever need a refresher or have any more questions, just let me know!

Author

So, with my plan (list it out to be sure we're on the same page), where does the WAF fit in? I remember someone mentioning WAF functionality that can be added to Nginx or something.

GPT

Sure, let’s list your plan and then discuss where a Web Application Firewall (WAF) fits in:

Your Plan

1. Operating System: Latest Ubuntu Server LTS

2. Web Server: Nginx (latest version)

3. Application Server: Node.js with Express

4. Database: PostgreSQL

Where a WAF Fits In

A Web Application Firewall (WAF) is designed to protect your web applications from various types of attacks and vulnerabilities. It operates by filtering and monitoring HTTP requests and responses between the client and the server. Here’s how a WAF fits into your architecture:

1. Placement in Architecture:

• Reverse Proxy Position: Typically, a WAF is placed in front of your web server (Nginx) or as a reverse proxy. This means it intercepts and inspects incoming requests before they reach your application server.

• Integration: For Nginx, you can integrate a WAF module directly into Nginx or use an external WAF solution that works in conjunction with Nginx.

2. WAF Functionality:

• Request Filtering: The WAF filters incoming HTTP requests based on predefined rules and patterns to block malicious requests. This includes SQL injection, cross-site scripting (XSS), and other common web attacks.

• Traffic Analysis: Analyzes incoming traffic for suspicious activity and potential threats, blocking or alerting based on configured security policies.

• Protection Against Vulnerabilities: Helps protect against known vulnerabilities and zero-day exploits by inspecting both requests and responses.

3. WAF Integration with Nginx:

• ModSecurity: One of the most commonly used WAFs with Nginx is ModSecurity. It’s an open-source WAF that can be integrated with Nginx through the ModSecurity-nginx connector. It provides robust protection against a wide range of web threats.

• NAXSI: Another option is NAXSI (Nginx Anti XSS & SQL Injection), a WAF module designed specifically for Nginx. It provides a rule-based approach to filter malicious requests.

4. Benefits of Using a WAF:

• Enhanced Security: Adds an additional layer of security to protect your web applications from common and advanced threats.

• Regulatory Compliance: Helps in meeting regulatory requirements by providing security features required by standards like PCI-DSS.

• Customizable Rules: Allows for the creation and management of custom rules tailored to your specific application needs.

Summary

In your setup, a WAF would be placed between the external traffic and your Nginx web server. It will inspect and filter HTTP requests and responses to ensure that only safe traffic reaches your Node.js application server. Integrating a WAF like ModSecurity or NAXSI with Nginx will help enhance the security of your web application by protecting against various types of attacks and vulnerabilities.
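The request-filtering idea can be sketched as a list of patterns checked against each incoming URL. The patterns below are deliberately crude and purely illustrative; they are not ModSecurity or NAXSI rules, and a real rule set is far larger and needs tuning against false positives.

```javascript
// Toy request filter. These patterns are ILLUSTRATIVE, not ModSecurity or NAXSI rules.
const wafRules = [
  { name: "sql-injection", pattern: /('|--|\bunion\b|\bselect\b)/i },
  { name: "xss", pattern: /<\s*script|on\w+\s*=/i },
  { name: "path-traversal", pattern: /\.\.\// },
];

function inspectRequest(url) {
  let decoded;
  try {
    decoded = decodeURIComponent(url); // inspect what the application would see
  } catch (err) {
    return { allowed: false, matched: ["malformed-encoding"] };
  }
  const matched = wafRules.filter((r) => r.pattern.test(decoded)).map((r) => r.name);
  return { allowed: matched.length === 0, matched };
}

console.log(inspectRequest("/products/123"));
console.log(inspectRequest("/products?id=1%27%20OR%201=1--"));
console.log(inspectRequest("/files?name=../../etc/passwd"));
```

Decoding before matching matters: the second request hides its quote character behind `%27`, which a filter looking only at the raw URL would miss.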

Author

What do you think about a dedicated WAF server? Given the situation (limited computing resources), I'm not sure yet whether a discrete WAF is worth it.

GPT

Using a dedicated WAF server can offer several benefits, but it also comes with its own set of trade-offs, especially in a resource-limited environment. Here’s a detailed look at the considerations for using a dedicated WAF server:

Benefits of a Dedicated WAF Server

1. Enhanced Security:

• Isolation: A dedicated WAF server isolates the WAF functionality from your application and web servers, potentially improving security by reducing the risk of a compromise affecting multiple components.

• Focused Resources: It allows the WAF to operate with dedicated resources, ensuring that it can perform extensive checks and analysis without impacting the performance of your application servers.

2. Scalability and Flexibility:

• Independent Scaling: You can scale the WAF server independently of your application servers. If traffic increases or more advanced protection is needed, you can upgrade or scale the WAF server without affecting the application servers.

• Custom Configuration: It allows for more tailored configuration and management of the WAF rules and policies, as you have a dedicated environment for tuning and maintenance.

3. Advanced Features:

• Full WAF Capabilities: Dedicated WAF solutions often come with advanced features and management tools that might not be available or as easily configured in an integrated setup.

Drawbacks of a Dedicated WAF Server

1. Resource Utilization:

• Additional Resources: A dedicated WAF server requires additional computational resources, including CPU, memory, and storage, which might be limited in your environment.

• Overhead: It introduces additional network latency and complexity, as traffic must pass through the WAF server before reaching the web and application servers.

2. Complexity:

• Configuration and Maintenance: Managing a dedicated WAF server adds complexity to your infrastructure. It requires additional configuration, monitoring, and maintenance efforts.

• Cost: Even if the hardware is already available, a dedicated WAF server may require more software licenses or services, adding to the overall cost.

3. Performance Impact:

• Latency: Introducing a dedicated WAF server can add latency to request processing, though this can be minimized with proper tuning and sufficient hardware resources.

Alternatives to a Dedicated WAF Server

1. Integrated WAF Solutions:

• Nginx WAF Modules: As mentioned, using WAF modules like ModSecurity or NAXSI directly integrated with Nginx can provide a good level of protection without requiring a separate server.

• Cloud-Based WAF: Consider cloud-based WAF services like Cloudflare, AWS WAF, or Azure Application Gateway. These services offload the WAF functionality to the cloud, reducing the burden on your local resources and often providing a high level of protection with minimal configuration.

2. Virtualized WAF:

• VM-Based WAF: If hardware resources are a concern but you still want a dedicated WAF, consider running the WAF as a virtual machine on your existing hypervisor. This allows you to allocate resources as needed without requiring a separate physical server.

Conclusion

Given your resource constraints, a dedicated WAF server might not be the most practical solution unless you have sufficient hardware and can manage the added complexity. For your situation, integrating a WAF directly with Nginx or using a cloud-based WAF service might be more efficient and cost-effective. These options can still provide robust protection while making better use of your limited resources.

Author

Oh, in case I didn't mention it, the web service will only be available within the intranet. If I need to access it via the internet, I will use the VPN service (already set up and running). Also, the only user will be me.

GPT

Given that your web service will be restricted to your intranet and you’ll be accessing it through a VPN, the need for a dedicated WAF server is reduced. Here’s how this setup impacts your decision:

1. Security Considerations

• Intranet Access: Since the service is only accessible within your intranet and not exposed directly to the public internet, the immediate risk of external attacks is lower.

• VPN Usage: VPNs provide an additional layer of security by encrypting traffic between your device and the VPN server. This helps protect your data from eavesdropping and tampering.

2. WAF Necessity

• Reduced Risk: With the service restricted to your intranet and access controlled via VPN, the need for an additional WAF server is less critical. Most attacks would be from inside your network, and if the network is secure, the risk is minimized.

• Integrated WAF Options: Integrating a WAF directly with Nginx (e.g., using ModSecurity or NAXSI) should be sufficient to handle common web application threats if you decide to include one.

3. Resource Management

• Resource Constraints: Given your limited hardware resources, using an integrated WAF or relying on existing security measures (like firewall rules and VPN) will be more efficient. A dedicated WAF server might not be necessary and could add unnecessary overhead.

4. Best Practices

1. Internal Security Measures:

• Firewall Rules: Configure internal firewall rules to restrict access between different segments of your network (e.g., between your application and database servers). This can help contain any potential breaches.

• Network Segmentation: Use VLANs or other network segmentation techniques to further isolate different parts of your network. This limits the potential spread of any compromise.

2. Application Security:

• Input Validation: Implement robust input validation and sanitization in your Node.js application to protect against common vulnerabilities like SQL injection and XSS.

• Secure Coding Practices: Follow secure coding practices, including regular code reviews and security testing, to identify and fix vulnerabilities.

3. Monitoring and Logging:

• Access Logs: Maintain access logs for your Nginx server and Node.js application. Monitor these logs for unusual activity or potential security incidents.

• Performance Monitoring: Implement performance monitoring to keep an eye on resource usage and identify potential bottlenecks or issues.

4. Backup and Recovery:

• Regular Backups: Perform regular backups of your PostgreSQL database and other critical data. Ensure that backups are stored securely and tested periodically for integrity.

• Disaster Recovery Plan: Develop a disaster recovery plan to address potential scenarios where data or services are lost or compromised. This should include steps for restoring from backups and mitigating any damage.

5. Security Updates:

• Patch Management: Keep all components of your stack (Ubuntu Server, Nginx, Node.js, PostgreSQL) up-to-date with the latest security patches and updates.

6. VPN Security Enhancements:

• Strong Authentication: Use strong authentication methods (e.g., multi-factor authentication) for accessing the VPN.

• Encryption: Ensure that VPN connections are encrypted using robust protocols to protect data in transit.
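The input validation item above can be sketched as an allow-list check that runs before any data reaches the database. The record shape (`date`, `odometerKm`, `note`) and the 500-character limit are hypothetical, chosen to resemble a vehicle service entry.

```javascript
// Allow-list validation for a hypothetical vehicle service record.
function validateServiceRecord(input) {
  const errors = [];
  if (typeof input.date !== "string" || !/^\d{4}-\d{2}-\d{2}$/.test(input.date)) {
    errors.push("date must be YYYY-MM-DD");
  }
  if (!Number.isInteger(input.odometerKm) || input.odometerKm < 0) {
    errors.push("odometerKm must be a non-negative integer");
  }
  if (typeof input.note !== "string" || input.note.length === 0 || input.note.length > 500) {
    errors.push("note must be 1-500 characters");
  }
  return { ok: errors.length === 0, errors };
}

console.log(validateServiceRecord({ date: "2024-09-17", odometerKm: 120450, note: "Oil change" }));
console.log(validateServiceRecord({ date: "17/09/2024", odometerKm: -5, note: "" }));
```

Validation like this complements parameterized queries and output escaping rather than replacing them. Note that the date check only verifies the format, not whether the calendar date exists.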

Summary

Given that your web service is restricted to your intranet and access is controlled via VPN, a dedicated WAF server may not be necessary. Instead, focus on integrating a WAF with Nginx if you wish to have an additional layer of security. Prioritize internal security measures, robust application practices, and regular monitoring to ensure the safety and performance of your web service. These practices will help you maintain a secure and efficient environment within your resource constraints.

Author

With your answer, that makes sense. I'm going to use an integrated WAF.

GPT

That sounds like a good plan! Using an integrated WAF with Nginx will help you maintain a streamlined setup while still providing an added layer of security for your web application. If you need any help with configuring the WAF or anything else, just let me know!

Author

Ah, also, I'm going to use a different database for each type of data stored (financial, vehicle service history, user data, and more). Do you think separating the servers (i.e. creating discrete DB server VMs) would be better, or would a single VM with multiple databases be secure enough?

GPT

The decision between using separate database servers (or VMs) versus a single VM with multiple databases depends on various factors, including security, performance, management, and resource constraints. Here’s a detailed comparison to help you decide:

Separate Database Servers (Discrete VMs)

Pros:

1. Enhanced Security:

• Isolation: Each database server is isolated from others, so a breach in one database does not compromise the others. This isolation can be crucial if different databases have different security requirements or if one database contains highly sensitive information.

• Access Control: Easier to implement and enforce different access controls and security policies for each database server.

2. Performance:

• Dedicated Resources: Each database server can be tuned and optimized for its specific workload, potentially improving performance.

• Reduced Contention: Separation reduces the risk of resource contention, as each VM can have its dedicated CPU, memory, and storage resources.

3. Scalability:

• Independent Scaling: You can scale each database server independently based on its needs. If one database experiences higher load, you can scale that server without affecting others.

4. Fault Isolation:

• Reduced Impact: Issues or failures in one database server are less likely to affect the others, leading to improved overall system stability.

Cons:

1. Resource Overhead:

• Increased Resource Usage: Running multiple VMs incurs additional overhead in terms of CPU, memory, and storage resources. This can be a concern if resources are limited.

2. Management Complexity:

• Increased Complexity: Managing multiple database servers can be more complex, requiring additional configuration, monitoring, and maintenance.

Single VM with Multiple Databases

Pros:

1. Resource Efficiency:

• Lower Overhead: Fewer VMs mean less resource overhead. You can utilize your hardware more efficiently by running multiple databases on a single VM.

2. Simplified Management:

• Easier Administration: Managing a single VM is generally simpler than managing multiple VMs. This includes updates, backups, and monitoring.

3. Cost-Effective:

• Reduced Costs: With fewer VMs, you reduce the costs associated with virtualization and hardware resources.

Cons:

1. Security Risks:

• Shared Environment: If the VM is compromised, all databases within it are potentially at risk. Security breaches in one database can affect the others if the VM itself is breached.

• Complex Access Control: Managing access and permissions for multiple databases within a single VM can be more complex.

2. Performance Issues:

• Resource Contention: Multiple databases sharing the same VM may lead to resource contention, affecting performance if not properly managed.

3. Scaling Challenges:

• Limited Flexibility: Scaling resources for one database affects all databases on the VM. You may need to over-provision resources to ensure that all databases perform well.

Recommendations

• For High Security Needs: If you have databases with significantly different security requirements or high-value data, using separate database servers (VMs) is generally recommended. This isolation helps in maintaining security and managing risk.

• For Resource Constraints: If resources are limited and you need to maximize efficiency, a single VM with multiple databases can be a viable option, provided that you implement strong security measures and monitor resource usage carefully.

• Hybrid Approach: Consider a hybrid approach where critical or high-value databases are isolated in separate VMs, while less sensitive or lower-priority databases are grouped together on a single VM. This can balance security, performance, and resource usage.

Conclusion

Given your context and the potential sensitivity of the data (e.g., financial and user data), using separate database servers for different types of data can provide better security and performance isolation. However, if resource constraints are a significant concern, carefully managing a single VM with multiple databases can be effective if proper security measures and resource management practices are in place.

Author

My priorities, in order, would be:

0. Confidentiality

1. Integrity

2. Cost

3. Availability

With that information, what would you suggest?

GPT

Given your priorities, here’s a tailored recommendation for managing your database setup:

1. Confidentiality (Highest Priority)

Recommendation: Separate Database Servers (Discrete VMs)

• Reasoning: Since confidentiality is your top priority, isolating databases on separate servers (VMs) provides the highest level of security. Each database server can be secured independently, reducing the risk that a breach in one database would compromise others.

• Additional Measures: Implement strong access controls, encryption (both at rest and in transit), and regular security audits for each database server.

2. Integrity

Recommendation: Separate Database Servers

• Reasoning: Using separate VMs helps maintain the integrity of each database by isolating them from one another. If one database server is compromised, the integrity of others remains intact.

• Additional Measures: Implement regular backups and use checksums or hash functions to ensure data integrity.
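The checksum idea above can be sketched roughly as follows. This is a minimal illustration, assuming backups are plain files on disk; the `.sha256` sidecar naming and the function names are hypothetical, not something taken from the conversation.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def sidecar_path(path: Path) -> Path:
    """Hypothetical naming scheme: the digest lives in '<backup name>.sha256'."""
    return path.with_name(path.name + ".sha256")


def write_checksum(path: Path) -> Path:
    """Record the digest of a freshly written backup next to it."""
    sidecar = sidecar_path(path)
    sidecar.write_text(sha256_of_file(path) + "\n", encoding="ascii")
    return sidecar


def verify_checksum(path: Path) -> bool:
    """Recompute the digest and compare it with the recorded one."""
    expected = sidecar_path(path).read_text(encoding="ascii").strip()
    return expected == sha256_of_file(path)
```

A digest stored next to the backup catches accidental corruption. It does not stop someone who can rewrite both files, so the digests would need to live somewhere the database VM cannot write to if tampering is the concern.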

3. Cost

Recommendation: Single VM with Multiple Databases

• Reasoning: If cost is a significant concern, consolidating multiple databases on a single VM can reduce hardware and management expenses. However, this should be weighed against the potential trade-offs in security and performance.

• Cost Management: Optimize the single VM’s resources and consider cloud-based solutions where you can scale resources based on need, which might offer cost-effective options.

4. Availability

Recommendation: Single VM with Multiple Databases (if cost is a concern)

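• Reasoning: A single VM with multiple databases is simpler to manage, but it is also a single point of failure: if that VM goes down, every database goes down with it. Since availability is your lowest priority, that trade-off may be acceptable. If separate VMs are used, ensure that each VM is configured for high availability and has appropriate failover mechanisms.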

• High Availability: Implement monitoring and automated failover solutions for database servers to maintain availability.

Summary

Given your priorities, the optimal solution would be to use separate database servers (VMs) to ensure maximum confidentiality and integrity. This approach aligns with your highest priorities but may increase costs. If budget constraints are significant, carefully manage a single VM with multiple databases while implementing strong security measures to protect confidentiality and integrity. This approach balances cost and security, though it may require more stringent monitoring and management.

If you choose the separate database servers approach, you might consider using a hybrid model where the most critical databases (e.g., financial) are isolated in separate VMs, while less sensitive data is consolidated if resource constraints are an issue.
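One way to make this trade-off explicit is a small weighted decision matrix. The sketch below is only an illustration: the linear rank weights and the 1-5 scores are assumptions read loosely off the pros and cons above, not measurements, and the option names are hypothetical labels.

```python
# Priority order from the conversation, highest first.
PRIORITIES = ["confidentiality", "integrity", "cost", "availability"]

# Assumption: linear rank weights (4, 3, 2, 1). Any decreasing weights would work.
WEIGHTS = {name: len(PRIORITIES) - rank for rank, name in enumerate(PRIORITIES)}

# Assumption: rough 1-5 scores (higher is better) for each deployment option.
OPTIONS = {
    "separate_vms": {"confidentiality": 5, "integrity": 5, "cost": 2, "availability": 4},
    "single_vm": {"confidentiality": 2, "integrity": 2, "cost": 5, "availability": 2},
    "hybrid": {"confidentiality": 4, "integrity": 4, "cost": 4, "availability": 3},
}


def weighted_score(scores):
    """Sum of each criterion's score multiplied by its priority weight."""
    return sum(WEIGHTS[name] * scores[name] for name in PRIORITIES)


def rank_options(options):
    """Return option names ordered from best to worst weighted score."""
    return sorted(options, key=lambda name: weighted_score(options[name]), reverse=True)


if __name__ == "__main__":
    for name in rank_options(OPTIONS):
        print(name, weighted_score(OPTIONS[name]))
```

With these particular numbers, fully separate VMs rank first and the hybrid model second. Changing the scores or weights changes the ranking, which is the point of writing them down.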

Author

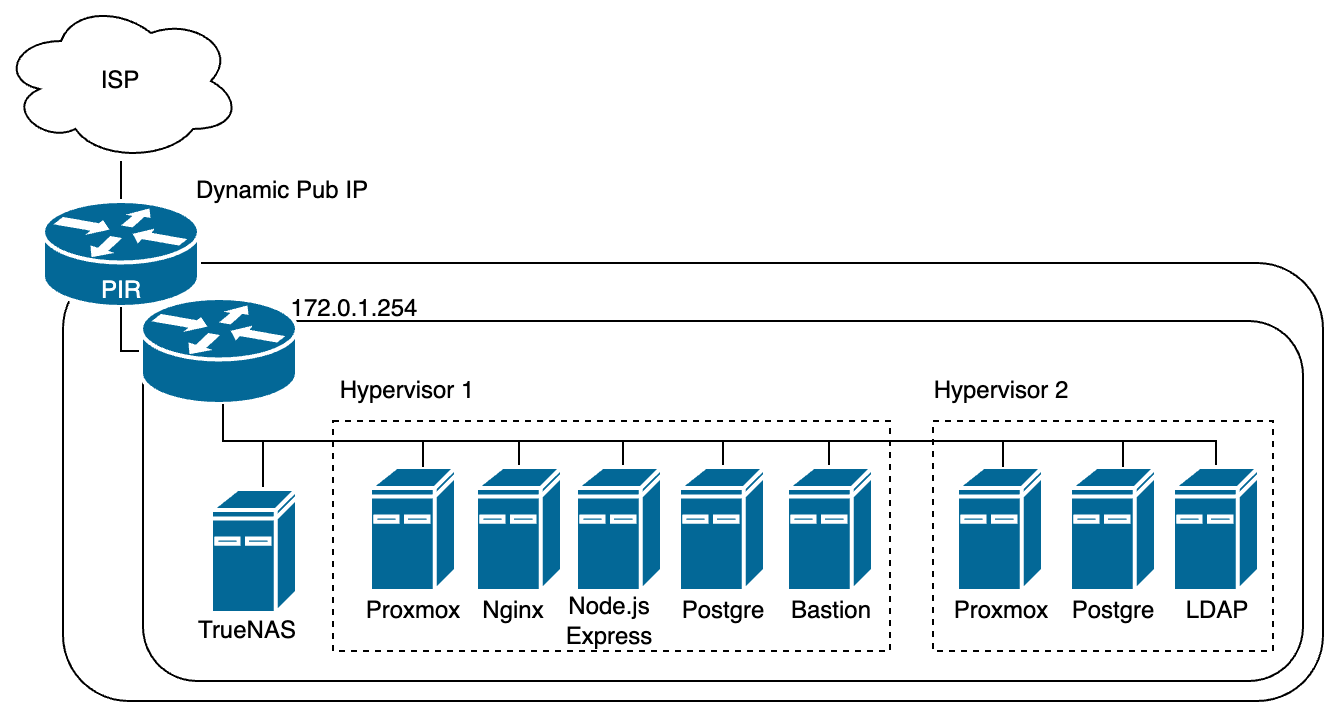

That hybrid option is a great idea. Maybe I'll have two hypervisors:

- Hypervisor 1: Web, WAS, DB (non-sensitive data)

- Hypervisor 2: DB (sensitive data, in discrete VMs)

GPT

That sounds like a solid plan! This hybrid approach allows you to balance security and resource constraints effectively:

Hypervisor 1: Web, WAS, and Less Sensitive DB

• Web and WAS: Hosts your web server (Nginx) and application server (Node.js with Express), which handle the user-facing side of the service for clients on the intranet and VPN.

• Less Sensitive DB: Manages databases that don’t contain highly sensitive information. This setup maximizes resource efficiency while still providing a reasonable level of security for less critical data.

Hypervisor 2: Discrete VMs for Sensitive Data

• Sensitive DBs: Runs discrete VMs for databases that contain sensitive data, ensuring that each database is isolated from the others. This isolation provides enhanced security and confidentiality for your most critical data.

Benefits of This Approach

1. Security: By isolating sensitive databases on a separate hypervisor, you ensure that critical data is protected even if other parts of your infrastructure are compromised.

2. Resource Management: Hypervisor 1 can be optimized for performance and resource efficiency, while Hypervisor 2 can be tuned for security and isolation.

3. Cost-Effectiveness: Balances the cost of running multiple VMs with the need for high-security measures, providing flexibility in managing your resources.

Additional Considerations

• Network Segmentation: Ensure that Hypervisor 1 and Hypervisor 2 are properly segmented on the network to prevent unauthorized access between them.

• Backup and Recovery: Implement a robust backup and recovery plan for both hypervisors to ensure data protection and availability.

• Monitoring and Alerts: Set up monitoring and alerting systems to track performance and security issues on both hypervisors.

If you have any more questions or need further guidance on setting this up, feel free to ask!
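The network segmentation point can be sketched as a default-deny flow table. This is a minimal model for reasoning about which connections should exist, not a firewall: the subnets, segment names, and ports are all hypothetical, since the conversation does not give an addressing plan or name a database engine.

```python
from ipaddress import ip_address, ip_network
from typing import Optional

# Hypothetical subnets, one per segment.
SEGMENTS = {
    "web": ip_network("10.0.10.0/24"),           # Hypervisor 1: Nginx
    "was": ip_network("10.0.20.0/24"),           # Hypervisor 1: Node.js app
    "db_general": ip_network("10.0.30.0/24"),    # Hypervisor 1: less sensitive DB
    "db_sensitive": ip_network("10.0.40.0/24"),  # Hypervisor 2: sensitive DB VMs
}

# Default deny: only these (source, destination, TCP port) flows are permitted.
# Ports are assumptions (3000 for the app, 5432 as an example database port).
ALLOWED_FLOWS = {
    ("web", "was", 3000),
    ("was", "db_general", 5432),
    ("was", "db_sensitive", 5432),
}


def segment_of(address: str) -> Optional[str]:
    """Return the name of the segment containing the address, if any."""
    ip = ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_allowed(src: str, dst: str, port: int) -> bool:
    """A flow is allowed only if both ends are known and the triple is listed."""
    src_segment, dst_segment = segment_of(src), segment_of(dst)
    if src_segment is None or dst_segment is None:
        return False
    return (src_segment, dst_segment, port) in ALLOWED_FLOWS
```

In this model the web tier can never reach a database directly, so `is_allowed("10.0.10.5", "10.0.40.11", 5432)` is false. The same table could then be translated by hand into rules for whatever firewall sits between the two hypervisors.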

Author

So now, there will be multiple (logical) servers. I'm going to add a Bastion host to connect via SSH to those servers. Would I need a RADIUS server, or would hardcoding credentials into server-side scripts be okay?

GPT

Using a Bastion host is a good practice for managing SSH access to your servers, especially when dealing with multiple servers and a focus on security. Here’s a breakdown of the two approaches you mentioned:

1. RADIUS Server

Pros:

• Centralized Authentication: RADIUS (Remote Authentication Dial-In User Service) provides a centralized authentication system. You can manage user credentials and access policies from a single location.

• Scalability: Easier to manage and scale user access across multiple servers.

• Auditing and Reporting: Often provides better auditing and reporting capabilities for user authentication activities.

• Integration: Can integrate with various authentication methods like multi-factor authentication (MFA), which adds an extra layer of security.

Cons:

• Complexity: Setting up and maintaining a RADIUS server can be complex, especially if you’re not already familiar with it.

• Resource Overhead: Requires additional hardware or virtual resources to run the RADIUS server.

2. Hardcoding Credentials into Server-Side Scripts

Pros:

• Simplicity: Easier to implement and manage if you’re only dealing with a few servers.

• No Additional Infrastructure: Does not require the setup of an additional RADIUS server or similar infrastructure.

Cons:

• Security Risks: Hardcoding credentials in server-side scripts is generally considered a security risk. If an attacker gains access to the script, they could potentially extract credentials and compromise your systems.

• Lack of Scalability: Managing and rotating credentials becomes cumbersome as the number of servers or users increases.

• No Centralized Management: Credentials are managed on a per-script basis, which can lead to inconsistent policies and harder auditing.
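Where a script does need a secret, one common way to avoid the hardcoding risk described above is to read the value from the process environment and fail fast if it is absent. The sketch below assumes that approach; `load_secret`, `MissingSecretError`, and the variable name `AMS_DB_PASSWORD` are hypothetical.

```python
import os
from typing import Mapping, Optional


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def load_secret(name: str, environ: Optional[Mapping[str, str]] = None) -> str:
    """Fetch a secret by name; an unset or empty value is treated as an error."""
    env = os.environ if environ is None else environ
    value = env.get(name, "")
    if not value:
        # Never fall back to a default baked into the script.
        raise MissingSecretError(f"environment variable {name} is not set")
    return value


if __name__ == "__main__":
    try:
        password = load_secret("AMS_DB_PASSWORD")  # hypothetical variable name
    except MissingSecretError as error:
        raise SystemExit(str(error))
    print("secret loaded, length", len(password))
```

This only moves the secret out of the source file. Whatever supplies the environment (for example a root-only file read by the service manager) still needs tight permissions, and it does not provide the central management that a directory or RADIUS server would.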

Recommendation

For better security and management, using a RADIUS server or similar centralized authentication system is generally recommended. It provides a more secure, scalable, and manageable approach for handling authentication across multiple servers.

Implementation Tips

• Bastion Host: Ensure that your Bastion host is configured securely, with strong access controls and monitoring.

• Multi-Factor Authentication (MFA): Consider using MFA for accessing the Bastion host and RADIUS server to add an extra layer of security.

• Regular Audits: Perform regular audits and reviews of your authentication and access control policies to ensure they meet your security requirements.

Summary

Given your focus on security and the need to manage access to multiple servers, using a RADIUS server (or similar centralized authentication service) is the recommended approach. It enhances security, simplifies management, and provides better scalability compared to hardcoding credentials into server-side scripts.
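On the client side, routing every SSH session through the Bastion host can be expressed with OpenSSH's `ProxyJump` option. The sketch below generates such an `ssh_config` fragment; the host aliases, addresses, and user name are hypothetical, and it covers only the routing, not how users are authenticated on each hop.

```python
from typing import Mapping


def render_ssh_config(bastion_alias: str, bastion_host: str, user: str,
                      internal_hosts: Mapping[str, str]) -> str:
    """Build ssh_config text: one block for the bastion, one per internal host."""
    blocks = [
        f"Host {bastion_alias}\n"
        f"    HostName {bastion_host}\n"
        f"    User {user}\n"
    ]
    for alias, address in internal_hosts.items():
        # ProxyJump makes the ssh client connect to the bastion first,
        # then open the session to the internal host through it.
        blocks.append(
            f"Host {alias}\n"
            f"    HostName {address}\n"
            f"    User {user}\n"
            f"    ProxyJump {bastion_alias}\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical inventory for illustration.
    hosts = {"web-01": "10.0.10.11", "db-fin": "10.0.40.11"}
    print(render_ssh_config("bastion", "bastion.home.example", "admin", hosts))
```

With a fragment like this in `~/.ssh/config`, `ssh db-fin` would hop through the bastion automatically, so the internal servers only need to accept SSH from the bastion's address.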

Author

Could you list all the possible options for credential management? I'm aware of LDAP, SSO, RADIUS, and hard-coding. Focus on free options.

GPT

Certainly! Here’s a list of credential management options, focusing on free or open-source solutions:

1. LDAP (Lightweight Directory Access Protocol)

Description:

• LDAP is a protocol used to access and manage directory services. It provides a centralized way to store and manage user credentials and access permissions.

Free/Open-Source Implementations:

• OpenLDAP: A widely used open-source implementation of the LDAP protocol.

• Apache Directory Server: An open-source directory server built on LDAP and other protocols.

2. RADIUS (Remote Authentication Dial-In User Service)

Description: